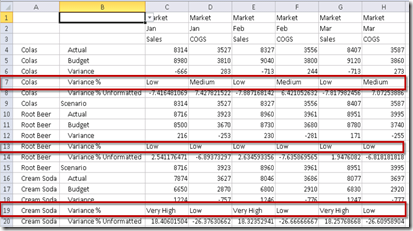

As we all know (or at least the cool kids know) the changes to Smart View have been coming quickly. So fast that the wise at Oracle decided to decouple its releases from the rest of the EPM stack. That is how we are at 11.1.2.5.200 while the rest of the stack is 11.1.2.3. I think this is a good thing as it allows Smart View to be more proactive in introducing changes to help us do more and better reporting. I applaud Oracle for doing this.

However, because the changes are coming so quickly, not everything gets documented very well. From the 11.1.2.5 new features documents we see this.

“Change to Display of Duplicate Variable Names

With this release, Smart View added functionality to display fully qualified variable names when variable names are duplicated. This helps to identify variables defined at the global, application, and database levels. “

But what does it mean? One of the cool features of Smart View is the ability to use substitution variables. You are thinking, what is so cool about that, the add-in could always do it and Smart View could do it since version 9X. Well yes, in both cases you could enter your substitution variable like &CurrMonth and when you retrieved, you would get the value of the substitution variable returned when you refresh the data. With this comes the limitation that if you save the spreadsheet, you save the actual value of the substitution variable and not the substitution variable itself. Huh? What I mean is suppose the substitution variable is set to Mar. It saves Mar and not &CurrMonth.

Starting in Smart View 11.1.2.1.102 a new way to use variables was introduced. HSGETVARIABLE. When used in a worksheet, it would retrieve the value of the variable but keep it as a formulaic member so next month when you changed the variable, your report would update with the new information. Pretty cool!

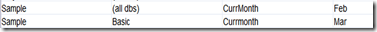

So lets get on to the change. Suppose I have the following substitution variables:

Notice I have both a global and database specific variable with the same name.

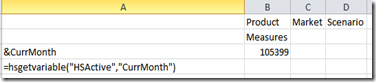

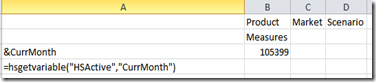

In 11.1.2.3.X and below, I could enter

=HSGetVariable(“HSActive”,”CurrMonth”) and when refreshed, get the variable. Note, HSActive means the current active connection for the sheet, I could also put in a private connection name.

Starting in 11.1.2.5, the command allows you to put in a qualified name

=HSGetVariable(“HSACTIVE”,”Sample.CurrMonth”) to determine the scope of where to get the variable from. If left blank, it seems to get the global variable. If qualified, it picks up the variable from where you specify

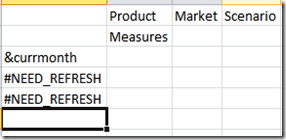

The screenshot above shows what the formulas look like, but in reality when you enter them you get is

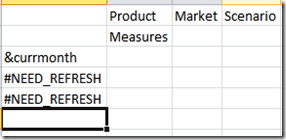

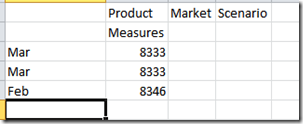

Once you refresh you get

The difference between rows 1 and 2 is Row one has been physically been changed to Mar while row 2 is still a formula. The interesting thing is unless you qualify the application, you will get the global variable.

Further, I’m using HSACTIVE for the connection name, you can actually use a different connection instead. For example if I created a private connection for Demo.Basic called DEMO, I could use it and it would pull from the Demo.Basic version of the variable even if I’m connected to Sample.Basic.

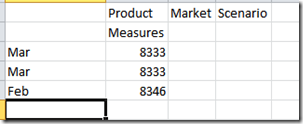

So here is where it is getting more interesting. In 11.1.2.5.200 I tried the same thing. When trying to use a global variable

=HsGetVariable("HSActive","currmth")

I get an error message

and what did not work before supplying both the application and database now does work

=HsGetVariable("HSActive","sample.basic.currmth")

As a test, I deleted the application and database level variables and then tried:

=HsGetVariable("HSActive","currmth")

and now it returns the global variable.

By the way &Currmth stopped working as well.

Between the two versions Oracle development has apparently been refining how this functionality works. So what worked in 11.1.2.5 does not necessarily work the same way in 11.1.2.5.200 and of course different than prior versions.

Anyway this is a good enhancement to substitution variables and I urge you to give it a try.